I’m one of those people who thinks that “artificial intelligence” is a misnomer. So is “machine learning” and, I hasten to admit, I sometimes extend this line of criticism all the way to such familiar things as “computer memory” and “programming language”. That is, I’m a bit of a kook. It is my deep conviction that computers can’t think, speak, or remember, and I don’t just mean that they can’t do it like we do it; I mean they can’t do it at all. There is no literal sense in which a machine can learn and, if we are to take it figuratively, the metaphor is stone dead. Much of the discourse about the impending rise of artificial general intelligence these days sounds to me like people earnestly claiming that, since they have “legs”, surely tables are soon going to walk. We have not, if you’ll pardon it, taken the first step towards machines that can think.

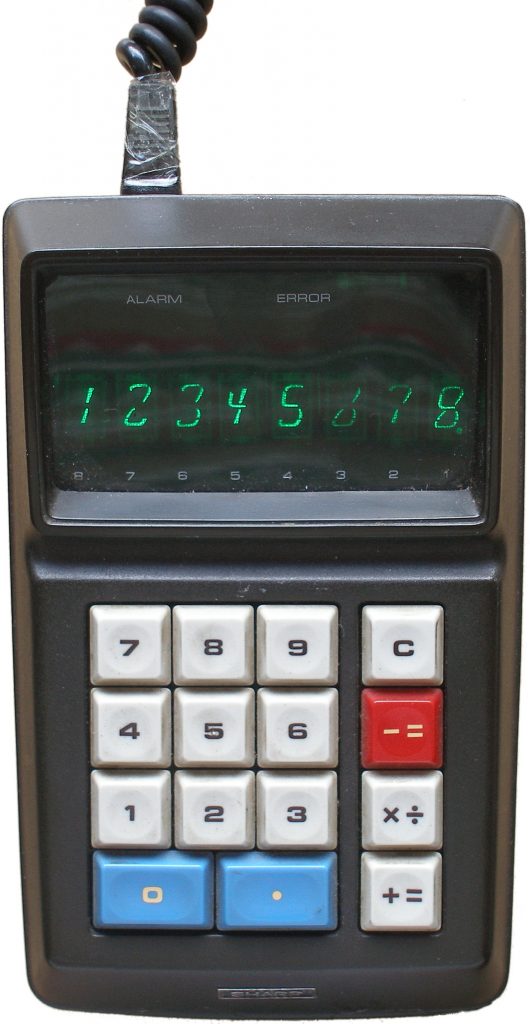

To fully appreciate my seriousness on this point, let me try to persuade you that pocket calculators can’t do math either. They have a long and very interesting history and, until I looked into it, I didn’t realize that it was actually Blaise Pascal who invented the mechanical adding machine – the Pascaline – in 1642. But, before this, there was always the abacus, and before this there was the root of the word “calculus”, namely, “chalk,” or the small limestone pebbles that were used as counters and eventually became the beads on the wires of counting frames. Interestingly, the word “abacus” originally denoted a writing surface of sand that was also used to do calculations. In any case, I hope you will immediately agree that a pile of pebbles or a string of beads can’t “do math”. A paper bag that you put two apples into and then another two is not capable of addition just because there are, in fact, four apples in the bag when you look.

Is it such a leap from this to the electronic calculator? Do we have to imbue it with “intelligence” of any kind to make sense of it? Some will point out that calculators use numbers and operators in their symbolic form as input and output. You type “2 + 2 =” and you get “4” back. But suppose you have an ordinary kitchen scale and a bunch of labelled standard weights. Surely you can get it to “add up” the weights for you? Or you can imagine a plumbing system that moves quantities of water in and out of tanks, marked off with rulers so that the quantities are made explicit. Whether these systems are manual, hydraulic, mechanical, or electronic doesn’t change the fact that they are just physical processes that, properly labelled, give us an output that we humans can make sense of.

That’s all very well, you might say, but have I tried ChatGPT? How do I explain the perfectly intelligible output it produces daily? Here’s my take: it articulates words, like a calculator calculates numbers. To calculate is basically just to “keep count”. To articulate is just to “join up”. A machine that is capable of keeping count is no more intelligent than a paper bag, nor is a machine that is capable of joining words together. Imagine you have three bags, numbered 1, 2, 3. In bag number 1 there are slips of paper with names on them: “Thomas”, “Zahra”, “Sami”, “Linda”. In bag number 2 there are phrases like “is a”, “wants to be a”, “was once a”, and so forth. In bag number 3, there are the names of professions: “doctor”, “carpenter”, “lawyer”, “teacher”, etc. You can probably see where this is going. To operate this “machine”, you pull a slip of paper out of each bag and arrange them 1-2-3 in order. You’ll always get a string of words that “make sense”. Can this arrangement of three bags write? Of course not!
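For readers who would rather see the three bags than imagine them, here is a minimal sketch in Python. The slips are the ones listed above; the names `BAG_1`, `BAG_2`, `BAG_3` and `draw_sentence` are made up for illustration, and the random draw stands in for a hand reaching into each bag.

```python
import random

# The three "bags"; the slips are the ones listed in the text.
BAG_1 = ["Thomas", "Zahra", "Sami", "Linda"]
BAG_2 = ["is a", "wants to be a", "was once a"]
BAG_3 = ["doctor", "carpenter", "lawyer", "teacher"]


def draw_sentence(rng):
    """Pull one slip from each bag and lay them out in 1-2-3 order."""
    return " ".join(rng.choice(bag) for bag in (BAG_1, BAG_2, BAG_3))


if __name__ == "__main__":
    rng = random.Random()
    for _ in range(3):
        print(draw_sentence(rng) + ".")
```

Every run prints three grammatical sentences, and nothing in the program knows what a doctor or a carpenter is. The "sense" is guaranteed entirely by how the slips were sorted into bags beforehand.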

In a famous essay, Borges credits Ramon Llull with the invention of a “thinking machine” that works much like this. Or, rather, doesn’t work: it is not “capable of thinking a single thought, however rudimentary or fallacious.” Llull’s device (or at least the device that Borges imagined based on Llull’s writings) consisted of a series of discs that allowed the operator to combine (or “correlate”) agents, patients, acts. (I wonder if this is where the “robot rights” people got the idea of distinguishing between the moral “agency” and “patiency” of subjects.) “Measured against its objective, judged by its inventor’s illustrious goal,” Borges tells us, “the thinking machine does not work,” but, like all metaphysical systems, he also points out, its “public and well-known futility does not diminish [its] interest.”

The machinery has become a lot more complicated since Pascal and Llull. But I want to insist that it has not become more mysterious and, by the same token, not more intelligent. That is, we don’t need a “ghost in the machine” to explain how the prompt we give to a language model spurs it to produce an output that, on the face of it, “makes sense”. Just as the calculator has no “understanding” of numbers or quantities or the mathematical operations it carries out, so, too, does ChatGPT have no conception of what the prose it generates means. All it does is convert a text into a string of numbers, those numbers into vectors in a hundred-dimensional space, in which it then locates the nearest points and, from these, chooses one somewhat at random (depending on the “temperature”). It converts the result (which is just a number) back into a word or part of a word (by looking it up in a table) and adds this to the string it is building. It repeats the process to find the next word. If “artificial intelligence” is a misnomer, what would I call these machines? They are electronic articulators.
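The loop just described can be written out as a toy. What follows is a sketch of the paragraph's simplified account, not of how ChatGPT or any real language model is implemented: the five-word vocabulary, the two-dimensional vectors, the choice to represent the text so far by the average of its vectors, and the names `encode`, `next_id` and `articulate` are all assumptions made for illustration.

```python
import math
import random

# Hypothetical lookup table between words and numbers.
VOCAB = ["the", "machine", "joins", "words", "together"]
WORD_TO_ID = {word: i for i, word in enumerate(VOCAB)}

# Made-up 2-D vectors, one per number (the essay speaks of far more dimensions).
VECTORS = [(0.0, 0.0), (1.0, 0.0), (1.0, 1.0), (0.0, 1.0), (0.5, 0.5)]


def encode(text):
    """Convert a text into a string of numbers by table lookup."""
    return [WORD_TO_ID[word] for word in text.split()]


def next_id(ids, temperature, rng, k=3):
    """Choose the next number from the k points nearest to the text so far."""
    # Assumption: the text so far is represented by the mean of its vectors.
    point = tuple(sum(VECTORS[i][d] for i in ids) / len(ids) for d in range(2))
    distance = lambda i: math.dist(point, VECTORS[i])
    nearest = sorted(range(len(VOCAB)), key=distance)[:k]
    # Closer points weigh more; a higher temperature flattens the weights.
    closest = distance(nearest[0])
    weights = [math.exp(-(distance(i) - closest) / temperature) for i in nearest]
    return rng.choices(nearest, weights=weights, k=1)[0]


def articulate(prompt, steps, temperature=1.0, seed=None):
    """Repeat the lookup-and-choose step, adding one word each time."""
    rng = random.Random(seed)
    ids = encode(prompt)
    for _ in range(steps):
        ids.append(next_id(ids, temperature, rng))
    # Convert the numbers back into words by looking them up in the table.
    return " ".join(VOCAB[i] for i in ids)


if __name__ == "__main__":
    print(articulate("the machine", steps=4, temperature=0.7))
```

The temperature must be greater than zero. As it approaches zero the choice collapses onto the single nearest point, and as it grows the draw among the nearest points becomes closer to uniform.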

I doubt this will catch on. In 1971, the year I was born, Sharp marketed its EL-8 calculator as “a really fast thinker” and I remember, ten years later, my elementary school principal announcing that a computer (an Apple II+) would be “moving in”. It was all very exciting.

“It is my deep conviction that computers can’t think, speak, or remember,”

Do brains think, speak or remember?

It does seem strange to say that a brain “thinks”, as there are mentalistic terms that are part of our folk psychology theory of mind which seem appropriate to apply to “persons”, and not to brains. And vice versa, there are terms that are more appropriate to refer to what brains do rather than persons.

It seems that LLMs may have more in common with brains than they do with persons. Like brains, they are computational devices (just electronic, not biological), and lack many of the attributes associated with personhood. Though unlike brains, they have some features that emulate persons, such as the simulation of personal agency – “As an LLM, I…”.

This makes it even less clear whether these folk psychology terms are appropriately applied to LLMs. But since they are part of folk psychology, it is the folk who will eventually decide, and I suspect that attempts to demarcate the “proper and correct” referents for these terms are a losing battle.

My quick and easy answer is that I’m agnostic about whether brains think and certain that people do. Folk psychology (as the name suggests) applies to people. I’m fine with that.

So, if we were able to add some more of the features that constitute “persons” to LLMs, would it then be possible to attribute qualities like “thinking” to these systems? It seems like your answer would still be no, though perhaps it depends on the features.

“ChatGPT [has] no conception of what the prose it generates means. All it does is to convert a text into a string of numbers, those numbers into vectors in a hundred-dimensional space”

Where I take issue with arguments like these is that you seem to be framing a mechanistic account of a phenomenon as a bad thing (“all it does…”). Yet the goal of the cognitive sciences is exactly this – if we are able to provide these kinds of mechanistic accounts to explain complex behaviours in humans then we should be very happy.

“we don’t need a ‘ghost in the machine’”

I agree! Ditto with humans – but for some, terms like meaning, thinking, understanding, etc. seem to function as a “ghost in the machine” that people want to keep in, not explain away.

A purely mechanistic account (which must, I assume, be causal) cannot capture what Kant called “intuition”, i.e., the fact that some objects of knowledge are given to us immediately. Technically, it can’t capture the “phenomena” since there is no place where the thing can appear. There is no “there there”; it’s just one thing after another. That’s what the human “ghost” contributes.

I am aware that cognitive science (and social science more generally) is forever trying to “explain it away”. I think this is a “bad thing” because persons are not just sites of experience, they are also loci of responsibility. The so-called “agency” of machines forever dissolves into media. Our humanity — each to each — is immediately present.